Project 1: Real estate prediction using neural networks, polynomial regression, and ridge regression

library(reticulate)

use_python("/Users/gregorycrooks/opt/anaconda3/envs/r-reticulate/bin/python")

0. Project summary

The aim of this project is to analyze real estate parameters and see how they affect the house price per unit area. Alongside an exploratory data analysis with comprehensive data visualization, several non-linear machine learning techniques were implemented in the modelling process. Lastly, this project includes an executive summary of results geared towards non-technical audiences.

import matplotlib.pyplot as plt

import numpy as np

import pandas as pd

import seaborn as sns

1. Introduction / rationale

Our aim is to analyze real estate parameters and see how they affect the house price per unit area. The data was collected in Sindian Dist., New Taipei City, Taiwan. Given the large fluctuations in real estate prices due to COVID, investors can benefit from additional insight into which factors influence house prices. Real estate prices depend heavily on a neighborhood's location, the ease of commuting, and the proximity of shops, so identifying which of these factors drive the price per unit area can provide additional value. Linear regression, polynomial regression, and neural networks will be used to model the dataset and support our subsequent analysis.

2. Data exploration

Our initial exploration shows that the dataset has 8 columns. Since our research question centers on house prices, we take “Y house price of unit area” as the dependent variable. The column ‘No’ is only a row index, and the remaining 6 columns (‘X1 transaction date’, ‘X2 house age’, ‘X3 distance to the nearest MRT station’, ‘X4 number of convenience stores’, ‘X5 latitude’, ‘X6 longitude’) are the initial explanatory variables. There are 414 observations in total.

df = pd.read_csv('/Users/gregorycrooks/Desktop/Real estate.csv', na_values='?')

df.shape

## (414, 8)

df.describe()

## No ... Y house price of unit area

## count 414.000000 ... 414.000000

## mean 207.500000 ... 37.980193

## std 119.655756 ... 13.606488

## min 1.000000 ... 7.600000

## 25% 104.250000 ... 27.700000

## 50% 207.500000 ... 38.450000

## 75% 310.750000 ... 46.600000

## max 414.000000 ... 117.500000

##

## [8 rows x 8 columns]

print(df.isnull().sum())

## No 0

## X1 transaction date 0

## X2 house age 0

## X3 distance to the nearest MRT station 0

## X4 number of convenience stores 0

## X5 latitude 0

## X6 longitude 0

## Y house price of unit area 0

## dtype: int64

2.1 Data cleaning

To better understand our data, we check the type of each column (int or float) and find that no substantial cleaning is required, since every variable is already in an appropriate numeric format. We also check for missing data: every column contains 0 NaN values, so no additional wrangling is needed. We drop the ‘No’ column, which is only a row index. Finally, we print a random sample of the dataset to further examine the variables, and no particular anomaly is detected.
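The three checks described above (numeric types, missing values, dropping the row index) can be sketched as follows. This is a minimal illustration on a hypothetical three-row DataFrame with made-up values; df_demo is an assumed name, and on the real data the same calls would apply to df.

```python
import pandas as pd

# Hypothetical miniature stand-in for the real CSV: same kind of column
# names, illustrative values only.
df_demo = pd.DataFrame({
    "No": [1, 2, 3],
    "X2 house age": [32.0, 19.5, 13.3],
    "X4 number of convenience stores": [10, 9, 5],
    "Y house price of unit area": [37.9, 42.2, 47.3],
})

# 1) Every column should already be numeric (int or float)
non_numeric = [c for c in df_demo.columns
               if not pd.api.types.is_numeric_dtype(df_demo[c])]

# 2) Total number of missing values across all columns (expected: 0)
missing_total = int(df_demo.isnull().sum().sum())

# 3) 'No' is only a row identifier, so it is dropped before modelling
df_demo = df_demo.drop(columns=["No"])

print("non-numeric columns:", non_numeric)
print("missing values:", missing_total)
print("remaining columns:", list(df_demo.columns))
```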

pd.set_option('display.max_rows', df.shape[0]+1)

del df['No']

print(df.dtypes)

## X1 transaction date float64

## X2 house age float64

## X3 distance to the nearest MRT station float64

## X4 number of convenience stores int64

## X5 latitude float64

## X6 longitude float64

## Y house price of unit area float64

## dtype: object

df.sample(10)

## X1 transaction date ... Y house price of unit area

## 343 2013.000 ... 46.6

## 34 2012.750 ... 55.1

## 305 2013.083 ... 55.0

## 277 2013.417 ... 27.7

## 19 2012.667 ... 47.7

## 36 2012.917 ... 22.9

## 73 2013.167 ... 20.0

## 139 2012.667 ... 42.5

## 274 2013.167 ... 41.0

## 366 2012.750 ... 24.8

##

## [10 rows x 7 columns]

2.2 Removing outliers and unnecessary variables

To make sure that the data is accurate, we also look for outliers and remove variables that are irrelevant.

We notice many outliers in the ‘distance to the nearest MRT station’ variable. However, these values are not anomalous: it is not unrealistic for a property to be far away from an MRT station (even 5 to 6 km). We also keep longitude and latitude, since house prices depend heavily on location and these coordinates may therefore provide insightful information for our analysis.

We therefore keep 6 independent variables for our analysis of the house price per unit area: transaction date, house age, distance to the nearest MRT station, number of convenience stores, longitude, and latitude.
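The boxplots below flag outliers with the 1.5 × IQR rule: matplotlib, which seaborn's boxplot builds on, draws any point lying more than 1.5 interquartile ranges beyond the quartiles as an individual point (whis=1.5 by default). The helper here is a minimal sketch of that rule; iqr_outlier_mask and the distances are hypothetical. As argued above, being flagged does not by itself make a value wrong, and the far-away houses are kept.

```python
import numpy as np

def iqr_outlier_mask(values, whis=1.5):
    """Return a boolean mask of points outside [Q1 - whis*IQR, Q3 + whis*IQR],
    the rule matplotlib/seaborn boxplots use to draw outlier points."""
    values = np.asarray(values, dtype=float)
    q1, q3 = np.percentile(values, [25, 75])
    iqr = q3 - q1
    lower, upper = q1 - whis * iqr, q3 + whis * iqr
    return (values < lower) | (values > upper)

# Hypothetical distances in metres: most houses near a station, a few far away
distances = np.array([85, 120, 300, 390, 560, 700, 900, 1200, 4500, 6300])
mask = iqr_outlier_mask(distances)
print("flagged as outliers:", distances[mask])
```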

sns.boxplot(x = df['X3 distance to the nearest MRT station']).set(xlabel='',

title = 'Boxplot 1: Distance to the nearest MRT station')

sns.boxplot(x = df['Y house price of unit area']).set(xlabel='',

title = 'Boxplot 2: House price of unit area')

2.3 Relationship between variables

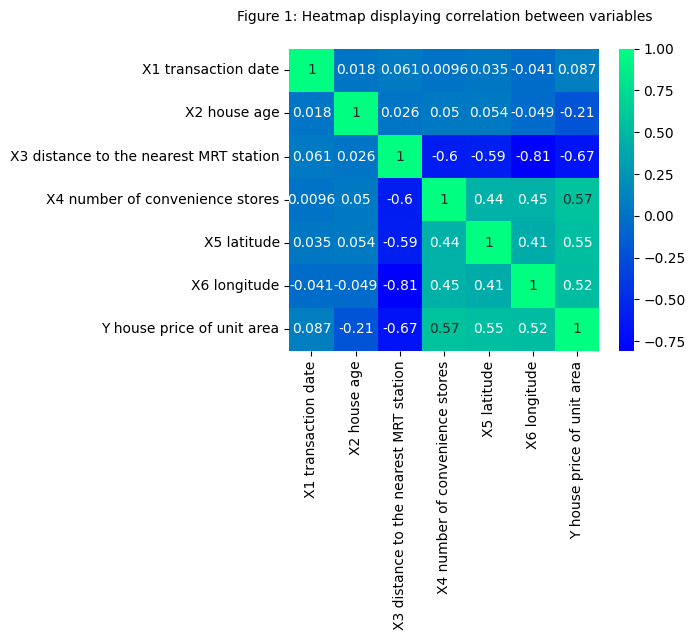

For further statistical analysis, we look at the correlation and multicollinearity between variables using the heatmap function from the seaborn package. We find a moderately strong positive correlation between the price of unit area and the longitude, the latitude, and the number of convenience stores. We also find a strong negative correlation between the distance to the nearest MRT station and the house price of unit area. Finally, the distance to the nearest MRT station has a very strong negative correlation with longitude, and a strong negative correlation with both the number of convenience stores and latitude. The heatmap has limitations, however: since latitude and longitude are interrelated geographical measures, each one on its own provides limited information. Our scatterplots therefore show how the two combined reveal geographical clusters.

plt.figure(figsize=(5, 4), dpi=100)

ax = sns.heatmap(df.corr(), annot=True,cmap='winter')

ax.set_title('Figure 1: Heatmap displaying correlation between variables',

pad = 20, fontsize = 10)

To analyze the distribution of our dependent variable, we plot a histogram, which shows a mildly right-skewed distribution. The most frequent price per unit area lies between 35 and 45.

# sns.displot creates its own figure, so no plt.figure() call is needed here

g = sns.displot(df['Y house price of unit area'], kde=True, bins=20, aspect=2).set(xlabel = 'Price of unit area',

title = "Histogram 1: Distribution of dependent variable")
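One way to put a number on the right skew seen in Histogram 1 is the sample skewness coefficient, which is positive when the right tail is longer than the left. The sketch below applies scipy.stats.skew to a synthetic sample (a normal bulk around 40 plus a few high prices); the data is made up and only illustrates the sign of the statistic, not the real dataset's value.

```python
import numpy as np
from scipy import stats

# Synthetic prices: a symmetric bulk plus a short list of very high values,
# which stretches the right tail
rng = np.random.default_rng(0)
prices = np.concatenate([rng.normal(40, 6, size=400), [90.0, 100.0, 117.5]])

skewness = stats.skew(prices)  # > 0 means the right tail is longer
print(f"sample skewness: {skewness:.2f}")
```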

In line with the correlation analysis from the heatmap, we further examine the variables that show a notable correlation with house price, using scatterplots to analyze their relationships.

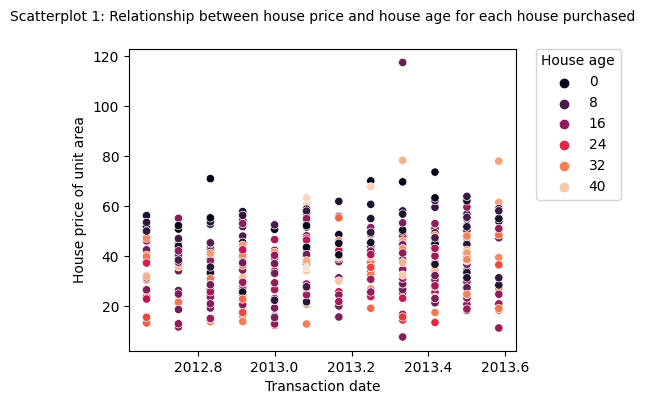

Scatterplot 1:

Scatterplot 1 plots the price of unit area against the transaction date, with points colored by house age (the legend shows house age at interval values spanning the minimum, 1st quartile, median, 3rd quartile, and maximum). Consistent with the heatmap, no significant correlation appears, although we can see a very mild increase in price for transaction dates between 2013.4 and 2013.6. A 12-month window is too short to establish this pattern, but external factors such as inflation or changes in the housing market could explain it.

plt.figure(figsize=(5, 4), dpi=100)

ax = sns.scatterplot(data=df, y=df['Y house price of unit area'], x=df['X1 transaction date'] , hue= 'X2 house age', palette="rocket")

ax.set(xlabel = 'Transaction date', ylabel = "House price of unit area")

ax.set_title("Scatterplot 1: Relationship between house price and house age for each house purchased",

pad = 20, fontsize = 10)

plt.legend(title='House age', bbox_to_anchor=(1.05, 1), loc=2, borderaxespad=0.,

fontsize = 10)

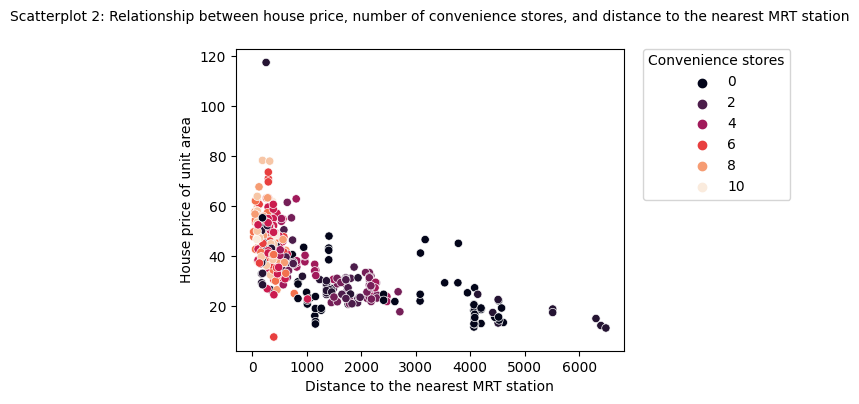

Scatterplot 2:

Scatterplot 2 shows the relationship between the house price of unit area, the number of convenience stores, and the distance to the nearest MRT station. The graph agrees with the correlation analysis from the heatmap: a shorter distance to the nearest MRT station coincides with more convenience stores and a higher house price of unit area. More specifically, houses within about 2500 meters of the nearest MRT station have a significantly higher price of unit area and 1 or more convenience stores nearby. From approximately 2500 meters onwards, few or no convenience stores can be found.

plt.figure(figsize=(5, 4), dpi=100)

ax = sns.scatterplot(data=df, y=df['Y house price of unit area'], x=df['X3 distance to the nearest MRT station']

, hue= 'X4 number of convenience stores', palette="rocket")

ax.set(xlabel = 'Distance to the nearest MRT station', ylabel = "House price of unit area")

ax.set_title("Scatterplot 2: Relationship between house price, number of convenience stores, and distance to the nearest MRT station",

pad = 20, fontsize = 10)

plt.legend(title='Convenience stores', bbox_to_anchor=(1.05, 1), loc=2, borderaxespad=0.,

fontsize = 10)

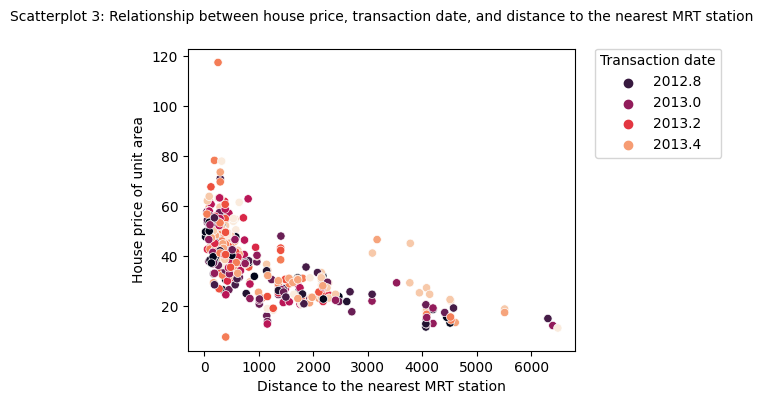

Scatterplot 3:

Scatterplot 3 inspects whether the distance to the nearest MRT station is related to how often transactions occur. The graph suggests that it is: regardless of the date of purchase, transactions cluster around houses within 2500 meters of the nearest MRT station, even though these houses also have a higher price of unit area. This suggests that buyers tend to favor houses that are easier to commute from, even if the price is higher.

plt.figure(figsize=(5, 4), dpi=100)

ax = sns.scatterplot(data=df, y=df['Y house price of unit area'], x=df['X3 distance to the nearest MRT station'] , hue= 'X1 transaction date', palette="rocket")

ax.set(xlabel = 'Distance to the nearest MRT station', ylabel = "House price of unit area")

ax.set_title("Scatterplot 3: Relationship between house price, transaction date, and distance to the nearest MRT station",

pad = 20, fontsize = 10)

plt.legend(title='Transaction date', bbox_to_anchor=(1.05, 1), loc=2, borderaxespad=0.,

fontsize = 10)

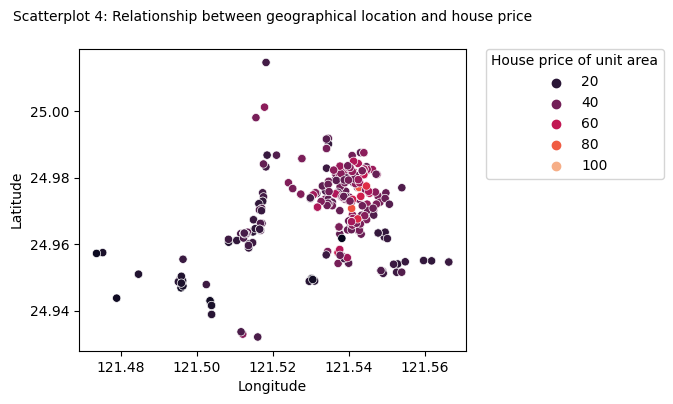

Scatterplot 4:

Scatterplot 4 shows how location affects price. Plotting latitude against longitude reveals a geographical cluster around a longitude of 121.54 and a latitude between 24.97 and 24.98. The closer a house is to this location, the more common it is to find a price of unit area between 60 and 100; on the outskirts of this concentration, prices decrease. Real estate is therefore more highly valued in this neighborhood, which suggests that it is a central area within the Sindian district.

plt.figure(figsize=(5, 4), dpi=100)

ax = sns.scatterplot(data=df, y=df['X5 latitude'], x=df['X6 longitude'] , hue= 'Y house price of unit area', palette="rocket")

ax.set(xlabel = 'Longitude', ylabel = "Latitude")

ax.set_title("Scatterplot 4: Relationship between geographical location and house price",

pad = 20, fontsize = 10)

plt.legend(title='House price of unit area', bbox_to_anchor=(1.05, 1), loc=2, borderaxespad=0.,

fontsize = 10)

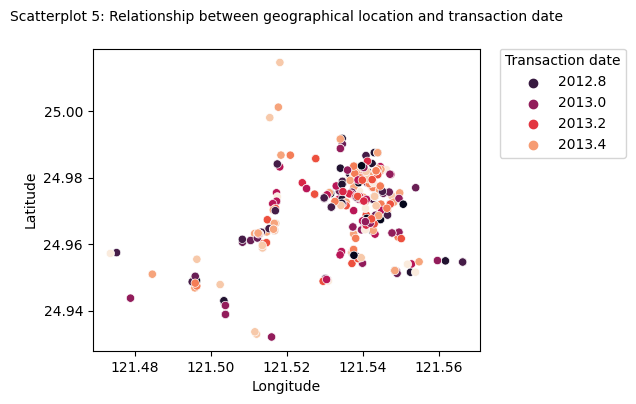

Scatterplot 5:

In Scatterplot 5, we analyze the relationship between transactions and location, with points colored by transaction date. Most transactions, whenever they took place, occur in the neighborhood with the highest price of unit area. This further supports our finding from Scatterplot 4 that this neighborhood is a central location: most buyers in the sample purchased houses there, even though prices tend to be higher.

plt.figure(figsize=(5, 4), dpi=100)

ax = sns.scatterplot(data=df, y=df['X5 latitude'], x=df['X6 longitude'] , hue= 'X1 transaction date', palette="rocket")

ax.set(xlabel = 'Longitude', ylabel = "Latitude")

ax.set_title("Scatterplot 5: Relationship between geographical location and transaction date",

pad = 20, fontsize = 10)

plt.legend(title='Transaction date', bbox_to_anchor=(1.05, 1), loc=2, borderaxespad=0.,

fontsize = 10)

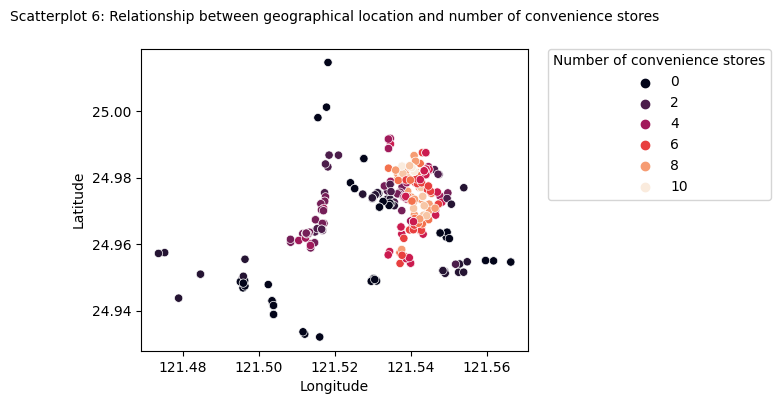

Scatterplot 6:

Scatterplot 6 shows the number of convenience stores in different neighborhoods of Sindian Dist., New Taipei City, Taiwan. Consistent with Scatterplots 4 and 5, the location with the most house purchases and the highest price of unit area also has the highest number of convenience stores. Neighborhoods on the outskirts of the city center tend to have 0 convenience stores and are less in demand than those with more convenience stores.

plt.figure(figsize=(5, 4), dpi=100)

ax = sns.scatterplot(data=df, y=df['X5 latitude'], x=df['X6 longitude'] , hue= 'X4 number of convenience stores', palette="rocket")

ax.set(xlabel = 'Longitude', ylabel = "Latitude")

ax.set_title("Scatterplot 6: Relationship between geographical location and number of convenience stores",

pad = 20, fontsize = 10)

plt.legend(title='Number of convenience stores', bbox_to_anchor=(1.05, 1), loc=2, borderaxespad=0.,

fontsize = 10)

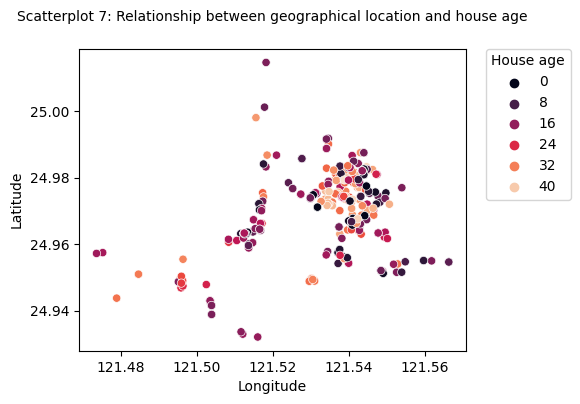

Scatterplot 7:

Scatterplot 7 shows that the city center concentrates most of the oldest houses. While some houses in the same neighborhood were built very recently, the median house age is approximately 16 years. Houses around 40 years old are disproportionately concentrated in this location, whereas houses built within the last 16 years are much more common outside the city center. This may indicate an established residential neighborhood in which many of the residents are families.

plt.figure(figsize=(5, 4), dpi=100)

ax = sns.scatterplot(data=df, y=df['X5 latitude'], x=df['X6 longitude'] , hue= 'X2 house age', palette="rocket")

ax.set(xlabel = 'Longitude', ylabel = "Latitude")

ax.set_title("Scatterplot 7: Relationship between geographical location and house age",

pad = 20, fontsize = 10)

plt.legend(title='House age', bbox_to_anchor=(1.05, 1), loc=2, borderaxespad=0.,

fontsize = 10)

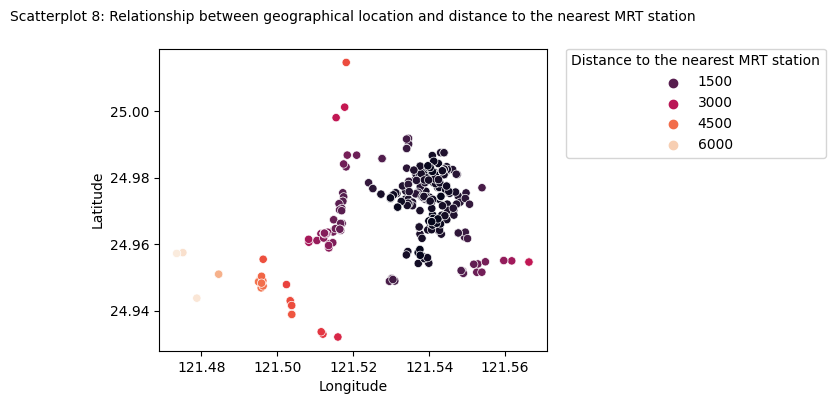

Scatterplot 8:

Scatterplot 8 displays the distance to the nearest MRT station across geographical locations. It clearly shows that most houses in the city center are close to an MRT station, which helps explain why demand and the price of unit area are higher there: it is easier to commute to and from this neighborhood. Most houses in the center are 1000 meters or less from an MRT station, whereas houses outside the central location are increasingly far from the nearest station. For instance, residents in the area around longitude 121.48 and latitude 24.96 are about 6 km away from the nearest station, which means much longer commutes.

plt.figure(figsize=(5, 4), dpi=100)

ax = sns.scatterplot(data=df, y=df['X5 latitude'], x=df['X6 longitude'], hue= 'X3 distance to the nearest MRT station', palette="rocket" )

ax.set(xlabel = 'Longitude', ylabel = "Latitude")

ax.set_title("Scatterplot 8: Relationship between geographical location and distance to the nearest MRT station",

pad = 20, fontsize = 10)

plt.legend(title='Distance to the nearest MRT station', bbox_to_anchor=(1.05, 1), loc=2, borderaxespad=0.,

fontsize = 10)

Lastly, we need to address collinearity. The results strongly suggest that the closer a house is to an MRT station, the more convenience stores can be found nearby. Of these two explanatory variables, the distance to the nearest MRT station has the stronger relationship with house price, so we do not include the number of convenience stores in our modelling. We also exclude latitude and longitude, since they are strongly interrelated. This leaves 3 explanatory variables: transaction date, house age, and distance to the nearest MRT station.
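The collinearity reasoning above can be made quantitative with the variance inflation factor (VIF), a standard diagnostic that this analysis does not itself use. VIF_j = 1 / (1 − R_j²), where R_j² comes from regressing feature j on the other features; values well above roughly 5 to 10 are commonly read as problematic collinearity. The sketch below computes VIF with plain NumPy least squares on synthetic data in which a hypothetical "stores" feature is built from "distance", then keeps whichever of the pair has the larger absolute correlation with the target, mirroring the choice made above. All data and names are assumptions.

```python
import numpy as np

def vif(X):
    """VIF for each column of X: 1 / (1 - R^2), with R^2 from an OLS
    regression (with intercept) of that column on all other columns."""
    X = np.asarray(X, dtype=float)
    n, p = X.shape
    out = []
    for j in range(p):
        target = X[:, j]
        A = np.column_stack([np.ones(n), np.delete(X, j, axis=1)])
        coef, *_ = np.linalg.lstsq(A, target, rcond=None)
        resid = target - A @ coef
        r2 = 1 - resid @ resid / np.sum((target - target.mean()) ** 2)
        out.append(1.0 / (1.0 - r2))
    return np.array(out)

# Synthetic data: stores is largely determined by distance; age is independent
rng = np.random.default_rng(1)
n = 300
distance = rng.uniform(50, 6000, n)
stores = 10 - distance / 600 + rng.normal(0, 1.0, n)
age = rng.uniform(0, 43, n)
price = 60 - 0.006 * distance - 0.2 * age + rng.normal(0, 3, n)

X = np.column_stack([distance, stores, age])
names = ["distance", "stores", "age"]
for name, v in zip(names, vif(X)):
    print(f"VIF {name}: {v:.1f}")

# Of the collinear pair, keep the feature more correlated with the target
corr = {name: abs(np.corrcoef(X[:, i], price)[0, 1]) for i, name in enumerate(names[:2])}
keep = max(corr, key=corr.get)
print("keep:", keep)
```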

3. Data modelling

3.1 Data split

To detect overfitting, our first step is to split the data into a training set and a test set (roughly ⅔ and ⅓, via test_size=0.33). We then standardize the features: the scaler is fitted on the training set, removing the mean and scaling to unit variance, and the same transformation is applied to the test set. We also fix the random seed (random_state=2022) to ensure reproducibility. To evaluate our models, we use the MAE (mean absolute error), the average absolute difference between each prediction and the true value of that observation.
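The split-then-scale procedure and the metric MAE = (1/n) Σ |ŷ_i − y_i| described above can be sketched on synthetic data. The features here are random stand-ins for the three retained variables, and naive is a hypothetical baseline that predicts the training mean everywhere; the sketch checks that a hand-written mae agrees with sklearn.metrics.mean_absolute_error.

```python
import numpy as np
from sklearn.metrics import mean_absolute_error
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler

# Synthetic Gaussian stand-ins for (transaction date, house age, MRT distance)
rng = np.random.default_rng(2022)
X = rng.normal(size=(414, 3)) * [0.3, 11.0, 1260.0] + [2013.1, 17.7, 1080.0]
y = rng.normal(38.0, 13.6, size=414)

X_train_raw, X_test_raw, y_train, y_test = train_test_split(
    X, y, test_size=0.33, random_state=2022)

# Fit the scaler on the training split only, then apply it to both splits,
# so no test-set statistics leak into preprocessing
scaler = StandardScaler().fit(X_train_raw)
X_train = scaler.transform(X_train_raw)
X_test = scaler.transform(X_test_raw)

def mae(predictions, y_true):
    """Mean absolute error: average of |prediction - true value|."""
    return np.mean(np.abs(predictions - y_true))

naive = np.full_like(y_test, y_train.mean())  # predict the training mean everywhere
print(len(y_train), len(y_test))
print(mae(naive, y_test), mean_absolute_error(y_test, naive))
```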

from scipy import stats

df_changed = df.drop(columns = ["X4 number of convenience stores",

"X5 latitude",

"X6 longitude",

'Y house price of unit area'])

X_values = df_changed.values

X_values## array([[2012.917 , 32. , 84.87882],

## [2012.917 , 19.5 , 306.5947 ],

## [2013.583 , 13.3 , 561.9845 ],

## ...,

## [2013.25 , 18.8 , 390.9696 ],

## [2013. , 8.1 , 104.8101 ],

## [2013.5 , 6.5 , 90.45606]])

y = df['Y house price of unit area'].values

from sklearn.model_selection import train_test_split

X_train_raw, X_test_raw, y_train, y_test = train_test_split(X_values, y, test_size=0.33, random_state=2022)

# standardize with training-set statistics only; this matters for ridge's penalty and for the numerical conditioning of high-degree polynomial features

from sklearn.preprocessing import StandardScaler

SS = StandardScaler()

SS.fit(X_train_raw)

## StandardScaler()

X_train = SS.transform(X_train_raw)

X_test = SS.transform(X_test_raw)

3.2 Initial linear regression

First, we run standard linear regression (i.e. a degree-1 polynomial) to set a baseline for comparison with polynomial regression. The gap between training and test error is modest (an MAE of 6.52 on the training set versus 7.38 on the test set), so there is no substantial overfitting. The baseline test MAE with standard linear regression is therefore about 7.38.

from sklearn.linear_model import LinearRegression

model = LinearRegression(fit_intercept=True)

model.fit(X_train, y_train)

## LinearRegression()

def mae(predictions, y_true):
    # mean absolute error: average of |prediction - true value|
    return np.mean(np.abs(predictions - y_true))

LR_train_mae = mae(model.predict(X_train), y_train)

LR_test_mae = mae(model.predict(X_test), y_test)

print("LR train mae: {}".format(LR_train_mae))

## LR train mae: 6.521571756338549

print("LR test mae: {}".format(LR_test_mae))

## LR test mae: 7.384596893522332

3.3 Poly features of best fit - regression

We then create polynomial features and train a linear regression on them (i.e. polynomial regression), trying degrees 1 to 7. A degree of 3 gives the lowest test error, with a test mean absolute error of 5.75. This degree also shows the least overfitting, since it has the smallest gap between training and test error (5.26 versus 5.75).

from sklearn.linear_model import Ridge

from sklearn.preprocessing import PolynomialFeatures

n_values = range(1, 8)

train_errors = []

test_errors = []

for n in n_values:
    # expand the standardized features into all terms up to degree n
    poly = PolynomialFeatures(degree=n, interaction_only = False)
    X_poly_train = poly.fit_transform(X_train)
    X_poly_test = poly.transform(X_test)
    # ordinary least squares on the expanded features
    model = LinearRegression(fit_intercept=True)
    model.fit(X_poly_train, y_train)
    LR_poly_train_mae = mae(model.predict(X_poly_train), y_train)
    LR_poly_test_mae = mae(model.predict(X_poly_test), y_test)
    train_errors.append(LR_poly_train_mae)
    test_errors.append(LR_poly_test_mae)
    print("N {}".format(n))
    print("LR train mae: {}".format(LR_poly_train_mae))
    print("LR test mae: {}".format(LR_poly_test_mae))
    print("")

## LinearRegression()

## N 1

## LR train mae: 6.521571756338549

## LR test mae: 7.384596893522332

##

## LinearRegression()

## N 2

## LR train mae: 5.4197054384186245

## LR test mae: 6.142148548896064

##

## LinearRegression()

## N 3

## LR train mae: 5.257224893526242

## LR test mae: 5.746138475538864

##

## LinearRegression()

## N 4

## LR train mae: 5.078420090412917

## LR test mae: 6.072010237420974

##

## LinearRegression()

## N 5

## LR train mae: 4.593417187102522

## LR test mae: 7.629315126992115

##

## LinearRegression()

## N 6

## LR train mae: 10.218626607626353

## LR test mae: 26.1658203125

##

## LinearRegression()

## N 7

## LR train mae: 9.570644954309566

## LR test mae: 42.298952511975365

plt.figure()

plt.title("Graph 3: Polynomial degree against train and test error")

plt.plot(n_values, train_errors, label="train_mae")

plt.plot(n_values, test_errors, label="test_mae")

plt.xlabel("Polynomial degree")

plt.ylabel("Mean absolute error")

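plt.legend()

This shows that a polynomial degree of N=3 is the best choice, as it has the lowest mean absolute error on the test set. As N goes above 3, the test error starts to increase while the training error keeps decreasing (down to 4.59 at N=5), which means the model starts to overfit. At N=6 and N=7 both errors jump sharply, which may reflect numerical instability in the unregularized fit with so many polynomial terms.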
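Choosing N by its test-set error means the test set is also used for model selection, which can make the final test MAE slightly optimistic. A common alternative, not used in this analysis, is k-fold cross-validation on the training data only. The sketch below assumes synthetic data with a cubic signal and wraps scaling, polynomial expansion, and linear regression in one pipeline, so each fold is scaled using only its own training portion.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import PolynomialFeatures, StandardScaler

# Synthetic training data: cubic in the first feature, linear in the second
rng = np.random.default_rng(0)
X = rng.uniform(-2, 2, size=(277, 3))
y = X[:, 0] ** 3 - 2 * X[:, 1] + rng.normal(0, 0.5, size=277)

cv_mae = {}
for degree in range(1, 8):
    model = make_pipeline(StandardScaler(), PolynomialFeatures(degree), LinearRegression())
    # sklearn reports negative MAE (higher is better), so flip the sign
    scores = cross_val_score(model, X, y, cv=5, scoring="neg_mean_absolute_error")
    cv_mae[degree] = -scores.mean()

best = min(cv_mae, key=cv_mae.get)
print({d: round(v, 3) for d, v in cv_mae.items()}, "best degree:", best)
```

The test set would then be used once, to report the error of the selected degree.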

3.4 Ridge regression

from sklearn.linear_model import Ridge

for a in np.linspace(0.01, 0.1, 10):
    # ridge on the original (degree-1) standardized features
    model = Ridge(alpha=a)
    model.fit(X_train, y_train)
    LR_train_mae = mae(model.predict(X_train), y_train)
    LR_test_mae = mae(model.predict(X_test), y_test)
    print("alpha {}".format(a))
    print("Ridge train mae: {}".format(LR_train_mae))
    print("Ridge test mae: {}".format(LR_test_mae))
    print("")

## Ridge(alpha=0.01)

## alpha 0.01

## Ridge train mae: 6.521596612634986

## Ridge test mae: 7.384567435651112

##

## Ridge(alpha=0.020000000000000004)

## alpha 0.020000000000000004

## Ridge train mae: 6.52162146722416

## Ridge test mae: 7.384537979824193

##

## Ridge(alpha=0.030000000000000006)

## alpha 0.030000000000000006

## Ridge train mae: 6.521646320106251

## Ridge test mae: 7.38450852604136

##

## Ridge(alpha=0.04000000000000001)

## alpha 0.04000000000000001

## Ridge train mae: 6.52167117128143

## Ridge test mae: 7.384479074302395

##

## Ridge(alpha=0.05000000000000001)

## alpha 0.05000000000000001

## Ridge train mae: 6.5216960207498795

## Ridge test mae: 7.384449624607088

##

## Ridge(alpha=0.06000000000000001)

## alpha 0.06000000000000001

## Ridge train mae: 6.521720868511773

## Ridge test mae: 7.3844201769552145

##

## Ridge(alpha=0.07)

## alpha 0.07

## Ridge train mae: 6.521745714567286

## Ridge test mae: 7.384390731346564

##

## Ridge(alpha=0.08)

## alpha 0.08

## Ridge train mae: 6.521770558916597

## Ridge test mae: 7.38436128778092

##

## Ridge(alpha=0.09000000000000001)

## alpha 0.09000000000000001

## Ridge train mae: 6.521795401559881

## Ridge test mae: 7.384331846258067

##

## Ridge(alpha=0.1)

## alpha 0.1

## Ridge train mae: 6.521820242497316

## Ridge test mae: 7.3843024067777865

3.5 Poly features - ridge regression

Ridge regression shrinks the regression coefficients, so that variables with a minor contribution to the outcome have coefficients close to zero. The shrinkage is achieved by adding a penalty term to the least-squares objective: alpha times the sum of the squared coefficients (an L2 penalty), where a larger alpha means stronger shrinkage.
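Concretely, without an intercept ridge minimizes ||y − Xw||² + alpha·||w||², whose solution is w = (XᵀX + alpha·I)⁻¹Xᵀy. The sketch below checks this closed form against sklearn's Ridge (with fit_intercept=False so the two objectives match) on synthetic data, then shows the coefficient norm shrinking as alpha grows; ridge_closed_form is a hypothetical helper name. For standardized features, each diagonal entry of XᵀX equals the number of training rows (about 277 here), so penalties of alpha = 0.01 to 0.1 are tiny by comparison, which is consistent with the nearly identical MAE values in section 3.4.

```python
import numpy as np
from sklearn.linear_model import Ridge

# Synthetic regression problem with two irrelevant features
rng = np.random.default_rng(0)
X = rng.normal(size=(100, 5))
y = X @ np.array([3.0, -2.0, 0.5, 0.0, 0.0]) + rng.normal(0, 1.0, size=100)

def ridge_closed_form(X, y, alpha):
    """Minimise ||y - Xw||^2 + alpha * ||w||^2 (no intercept) by solving
    (X^T X + alpha * I) w = X^T y."""
    p = X.shape[1]
    return np.linalg.solve(X.T @ X + alpha * np.eye(p), X.T @ y)

w_ours = ridge_closed_form(X, y, alpha=10.0)
w_sklearn = Ridge(alpha=10.0, fit_intercept=False).fit(X, y).coef_
print(np.allclose(w_ours, w_sklearn))

# Larger penalties pull the whole coefficient vector towards zero
norms = []
for alpha in [0.01, 1.0, 100.0, 10_000.0]:
    norms.append(np.linalg.norm(ridge_closed_form(X, y, alpha)))
print(norms)
```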

for n in range(1, 8):
    # polynomial expansion of degree n, fitted on the training set only
    poly = PolynomialFeatures(degree=n, interaction_only = False)
    X_poly_train = poly.fit_transform(X_train)
    X_poly_test = poly.transform(X_test)
    # sweep the ridge penalty for this degree
    for a in np.linspace(0.01, 0.1, 10):
        model = Ridge(alpha=a)
        model.fit(X_poly_train, y_train)
        LR_train_mae = mae(model.predict(X_poly_train), y_train)
        LR_test_mae = mae(model.predict(X_poly_test), y_test)
        print("n: {}, alpha {}".format(n, a))
        print("Ridge train mae: {}".format(LR_train_mae))
        print("Ridge test mae: {}".format(LR_test_mae))
        print("")

## Ridge(alpha=0.01)

## n: 1, alpha 0.01

## Ridge train mae: 6.521596612634987

## Ridge test mae: 7.384567435651111

##

## Ridge(alpha=0.020000000000000004)

## n: 1, alpha 0.020000000000000004

## Ridge train mae: 6.52162146722416

## Ridge test mae: 7.384537979824193

##

## Ridge(alpha=0.030000000000000006)

## n: 1, alpha 0.030000000000000006

## Ridge train mae: 6.521646320106251

## Ridge test mae: 7.38450852604136

##

## Ridge(alpha=0.04000000000000001)

## n: 1, alpha 0.04000000000000001

## Ridge train mae: 6.52167117128143

## Ridge test mae: 7.384479074302395

##

## Ridge(alpha=0.05000000000000001)

## n: 1, alpha 0.05000000000000001

## Ridge train mae: 6.5216960207498795

## Ridge test mae: 7.384449624607087

##

## Ridge(alpha=0.06000000000000001)

## n: 1, alpha 0.06000000000000001

## Ridge train mae: 6.521720868511773

## Ridge test mae: 7.384420176955215

##

## Ridge(alpha=0.07)

## n: 1, alpha 0.07

## Ridge train mae: 6.5217457145672855

## Ridge test mae: 7.384390731346564

##

## Ridge(alpha=0.08)

## n: 1, alpha 0.08

## Ridge train mae: 6.521770558916597

## Ridge test mae: 7.384361287780919

##

## Ridge(alpha=0.09000000000000001)

## n: 1, alpha 0.09000000000000001

## Ridge train mae: 6.521795401559881

## Ridge test mae: 7.384331846258067

##

## Ridge(alpha=0.1)

## n: 1, alpha 0.1

## Ridge train mae: 6.521820242497316

## Ridge test mae: 7.3843024067777865

##

## Ridge(alpha=0.01)

## n: 2, alpha 0.01

## Ridge train mae: 5.419788015892516

## Ridge test mae: 6.14219237087354

##

## Ridge(alpha=0.020000000000000004)

## n: 2, alpha 0.020000000000000004

## Ridge train mae: 5.4198705641308536

## Ridge test mae: 6.142246438154977

##

## Ridge(alpha=0.030000000000000006)

## n: 2, alpha 0.030000000000000006

## Ridge train mae: 5.41995308314937

## Ridge test mae: 6.142316141301987

##

## Ridge(alpha=0.04000000000000001)

## n: 2, alpha 0.04000000000000001

## Ridge train mae: 5.420035572963773

## Ridge test mae: 6.142385805767498

##

## Ridge(alpha=0.05000000000000001)

## n: 2, alpha 0.05000000000000001

## Ridge train mae: 5.420118033589764

## Ridge test mae: 6.14245543157578

##

## Ridge(alpha=0.06000000000000001)

## n: 2, alpha 0.06000000000000001

## Ridge train mae: 5.420200465043034

## Ridge test mae: 6.142525018751079

##

## Ridge(alpha=0.07)

## n: 2, alpha 0.07

## Ridge train mae: 5.4202828673392585

## Ridge test mae: 6.142594567317626

##

## Ridge(alpha=0.08)

## n: 2, alpha 0.08

## Ridge train mae: 5.420365240494108

## Ridge test mae: 6.142664077299633

##

## Ridge(alpha=0.09000000000000001)

## n: 2, alpha 0.09000000000000001

## Ridge train mae: 5.420447584523234

## Ridge test mae: 6.142733548721289

##

## Ridge(alpha=0.1)

## n: 2, alpha 0.1

## Ridge train mae: 5.420529899442285

## Ridge test mae: 6.142802981606769

##

## Ridge(alpha=0.01)

## n: 3, alpha 0.01

## Ridge train mae: 5.257306460235128

## Ridge test mae: 5.746328030735457

##

## Ridge(alpha=0.020000000000000004)

## n: 3, alpha 0.020000000000000004

## Ridge train mae: 5.2573879459838935

## Ridge test mae: 5.74651728936748

##

## Ridge(alpha=0.030000000000000006)

## n: 3, alpha 0.030000000000000006

## Ridge train mae: 5.257469350923302

## Ridge test mae: 5.746706252073681

##

## Ridge(alpha=0.04000000000000001)

## n: 3, alpha 0.04000000000000001

## Ridge train mae: 5.25755067520373

## Ridge test mae: 5.746894919491035

##

## Ridge(alpha=0.05000000000000001)

## n: 3, alpha 0.05000000000000001

## Ridge train mae: 5.257631918975165

## Ridge test mae: 5.747083292254784

##

## Ridge(alpha=0.06000000000000001)

## n: 3, alpha 0.06000000000000001

## Ridge train mae: 5.2577130823871885

## Ridge test mae: 5.747271370998428

##

## Ridge(alpha=0.07)

## n: 3, alpha 0.07

## Ridge train mae: 5.257794165589003

## Ridge test mae: 5.747459156353747

##

## Ridge(alpha=0.08)

## n: 3, alpha 0.08

## Ridge train mae: 5.257875168729412

## Ridge test mae: 5.747646648950769

##

## Ridge(alpha=0.09000000000000001)

## n: 3, alpha 0.09000000000000001

## Ridge train mae: 5.2579560919568396

## Ridge test mae: 5.747833849417803

##

## Ridge(alpha=0.1)

## n: 3, alpha 0.1

## Ridge train mae: 5.258036935419317

## Ridge test mae: 5.748020758381457

##

## Ridge(alpha=0.01)

## n: 4, alpha 0.01

## Ridge train mae: 5.078453229869225

## Ridge test mae: 6.07166585425305

##

## Ridge(alpha=0.020000000000000004)

## n: 4, alpha 0.020000000000000004

## Ridge train mae: 5.078486728809414

## Ridge test mae: 6.071324703217347

##

## Ridge(alpha=0.030000000000000006)

## n: 4, alpha 0.030000000000000006

## Ridge train mae: 5.078520581945106

## Ridge test mae: 6.070986746705667

##

## Ridge(alpha=0.04000000000000001)

## n: 4, alpha 0.04000000000000001

## Ridge train mae: 5.078554784064771

## Ridge test mae: 6.070651947652118

##

## Ridge(alpha=0.05000000000000001)

## n: 4, alpha 0.05000000000000001

## Ridge train mae: 5.078589330032319

## Ridge test mae: 6.0703202695232665

##

## Ridge(alpha=0.06000000000000001)

## n: 4, alpha 0.06000000000000001

## Ridge train mae: 5.078624214785893

## Ridge test mae: 6.069991676309093

##

## Ridge(alpha=0.07)

## n: 4, alpha 0.07

## Ridge train mae: 5.078659433336498

## Ridge test mae: 6.069666132513707

##

## Ridge(alpha=0.08)

## n: 4, alpha 0.08

## Ridge train mae: 5.07869498076683

## Ridge test mae: 6.069343603146454

##

## Ridge(alpha=0.09000000000000001)

## n: 4, alpha 0.09000000000000001

## Ridge train mae: 5.078730852229972

## Ridge test mae: 6.069024053713063

##

## Ridge(alpha=0.1)

## n: 4, alpha 0.1

## Ridge train mae: 5.078767042948241

## Ridge test mae: 6.068707450207112

##

## Ridge(alpha=0.01)

## n: 5, alpha 0.01

## Ridge train mae: 4.592942321154879

## Ridge test mae: 7.6263872681781395

##

## Ridge(alpha=0.020000000000000004)

## n: 5, alpha 0.020000000000000004

## Ridge train mae: 4.592472239791051

## Ridge test mae: 7.623392592210273

##

## Ridge(alpha=0.030000000000000006)

## n: 5, alpha 0.030000000000000006

## Ridge train mae: 4.592006794810789

## Ridge test mae: 7.620335412965674

##

## Ridge(alpha=0.04000000000000001)

## n: 5, alpha 0.04000000000000001

## Ridge train mae: 4.591545846385613

## Ridge test mae: 7.617219781492997

##

## Ridge(alpha=0.05000000000000001)

## n: 5, alpha 0.05000000000000001

## Ridge train mae: 4.591089262458251

## Ridge test mae: 7.61404950461583

##

## Ridge(alpha=0.06000000000000001)

## n: 5, alpha 0.06000000000000001

## Ridge train mae: 4.590675166559448

## Ridge test mae: 7.610923137786759

##

## Ridge(alpha=0.07)

## n: 5, alpha 0.07

## Ridge train mae: 4.590284071959667

## Ridge test mae: 7.60782720216512

##

## Ridge(alpha=0.08)

## n: 5, alpha 0.08

## Ridge train mae: 4.589894872552797

## Ridge test mae: 7.604685114387745

##

## Ridge(alpha=0.09000000000000001)

## n: 5, alpha 0.09000000000000001

## Ridge train mae: 4.58950755824834

## Ridge test mae: 7.601499903464217

##

## Ridge(alpha=0.1)

## n: 5, alpha 0.1

## Ridge train mae: 4.589122119093563

## Ridge test mae: 7.598274424900914

##

## Ridge(alpha=0.01)

## n: 6, alpha 0.01

## Ridge train mae: 4.372854140732388

## Ridge test mae: 13.578451730706403

##

## Ridge(alpha=0.020000000000000004)

## n: 6, alpha 0.020000000000000004

## Ridge train mae: 4.361406078796805

## Ridge test mae: 13.063643280873213

##

## Ridge(alpha=0.030000000000000006)

## n: 6, alpha 0.030000000000000006

## Ridge train mae: 4.351787912179385

## Ridge test mae: 12.606954306842633

##

## Ridge(alpha=0.04000000000000001)

## n: 6, alpha 0.04000000000000001

## Ridge train mae: 4.343233339562473

## Ridge test mae: 12.199491457494283

##

## Ridge(alpha=0.05000000000000001)

## n: 6, alpha 0.05000000000000001

## Ridge train mae: 4.335503318596305

## Ridge test mae: 11.833373501412852

##

## Ridge(alpha=0.06000000000000001)

## n: 6, alpha 0.06000000000000001

## Ridge train mae: 4.32974017655789

## Ridge test mae: 11.50287180855749

##

## Ridge(alpha=0.07)

## n: 6, alpha 0.07

## Ridge train mae: 4.325716639441944

## Ridge test mae: 11.203357982585137

##

## Ridge(alpha=0.08)

## n: 6, alpha 0.08

## Ridge train mae: 4.322743599071541

## Ridge test mae: 10.932097376706288

##

## Ridge(alpha=0.09000000000000001)

## n: 6, alpha 0.09000000000000001

## Ridge train mae: 4.320829259063197

## Ridge test mae: 10.855601985120046

##

## Ridge(alpha=0.1)

## n: 6, alpha 0.1

## Ridge train mae: 4.3191742804859405

## Ridge test mae: 10.844328497651988

##

## Ridge(alpha=0.01)

## n: 7, alpha 0.01

## Ridge train mae: 3.6695634828980355

## Ridge test mae: 32.971535138420286

##

## Ridge(alpha=0.020000000000000004)

## n: 7, alpha 0.020000000000000004

## Ridge train mae: 3.6519236204065595

## Ridge test mae: 30.344671517825283

##

## Ridge(alpha=0.030000000000000006)

## n: 7, alpha 0.030000000000000006

## Ridge train mae: 3.6499653510706715

## Ridge test mae: 28.488463369577268

##

## Ridge(alpha=0.04000000000000001)

## n: 7, alpha 0.04000000000000001

## Ridge train mae: 3.65595485104245

## Ridge test mae: 27.13309705562507

##

## Ridge(alpha=0.05000000000000001)

## n: 7, alpha 0.05000000000000001

## Ridge train mae: 3.661490497062608

## Ridge test mae: 26.151214938327325

##

## Ridge(alpha=0.06000000000000001)

## n: 7, alpha 0.06000000000000001

## Ridge train mae: 3.6664852845603

## Ridge test mae: 25.360421728811534

##

## Ridge(alpha=0.07)

## n: 7, alpha 0.07

## Ridge train mae: 3.6723092910229034

## Ridge test mae: 24.705760260224125

##

## Ridge(alpha=0.08)

## n: 7, alpha 0.08

## Ridge train mae: 3.6797223955442577

## Ridge test mae: 24.149637403823164

##

## Ridge(alpha=0.09000000000000001)

## n: 7, alpha 0.09000000000000001

## Ridge train mae: 3.686285498881524

## Ridge test mae: 23.668271292252104

##

## Ridge(alpha=0.1)

## n: 7, alpha 0.1

## Ridge train mae: 3.6924817648873645

## Ridge test mae: 23.244456914649188
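In the log above, training MAE keeps falling as the polynomial degree n rises, while test MAE climbs sharply from about 7.6 (n = 5) to over 23 (n = 7). One way to see why is to count the columns a degree-n expansion creates. The sketch below assumes three raw predictors (as used in this modelling step) and scikit-learn's default `include_bias=True`. Under those assumptions the expansion contains every monomial of total degree at most n, which gives C(d + n, n) columns for d inputs. The helper `n_poly_features` is hypothetical and written only for illustration.

```python
from math import comb


def n_poly_features(n_inputs: int, degree: int, include_bias: bool = True) -> int:
    """Number of columns produced by a full polynomial expansion.

    Counts all monomials of total degree <= `degree` in `n_inputs` variables,
    which is C(n_inputs + degree, degree). The constant (bias) column is one
    of those monomials, so subtract one when include_bias is False.
    """
    total = comb(n_inputs + degree, degree)
    return total if include_bias else total - 1


# Three raw predictors, at the degrees that appear in this section
for degree in (3, 5, 6, 7):
    print(degree, n_poly_features(3, degree))
# 3 20
# 5 56
# 6 84
# 7 120
```

At n = 7 the model is fitting 120 strongly correlated columns, so each coefficient is supported by relatively little data. That fits the overfitting pattern in the test MAE.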

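from sklearn.linear_model import Ridge
from sklearn.preprocessing import PolynomialFeatures

# X_train, X_test, y_train, y_test and mae() are defined earlier in the analysis
# Degree-3 polynomial expansion of the three predictors
n = 3
poly = PolynomialFeatures(degree=n, interaction_only=False)
X_poly_train = poly.fit_transform(X_train)
X_poly_test = poly.transform(X_test)  # reuse the expansion fitted on the training data

train_mae = []
test_mae = []
a_values = np.linspace(0.01, 0.1, 10)  # ten alphas from 0.01 to 0.1

for a in a_values:
    model = Ridge(alpha=a)
    model.fit(X_poly_train, y_train)
    # MAE is symmetric, so argument order does not change the value
    LR_train_mae = mae(y_train, model.predict(X_poly_train))
    LR_test_mae = mae(y_test, model.predict(X_poly_test))
    train_mae.append(LR_train_mae)
    test_mae.append(LR_test_mae)
    #print("n: {}, alpha {}".format(n, a))
    #print("Ridge train mae: {}".format(LR_train_mae))
    #print("Ridge test mae: {}".format(LR_test_mae))
    #print("")

## Ridge(alpha=0.01)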

## Ridge(alpha=0.020000000000000004)

## Ridge(alpha=0.030000000000000006)

## Ridge(alpha=0.04000000000000001)

## Ridge(alpha=0.05000000000000001)

## Ridge(alpha=0.06000000000000001)

## Ridge(alpha=0.07)

## Ridge(alpha=0.08)

## Ridge(alpha=0.09000000000000001)

## Ridge(alpha=0.1)

plt.plot(a_values, train_mae, label="train_mae")
plt.plot(a_values, test_mae, label="test_mae")
plt.xlabel("alpha")
plt.ylabel("MAE")
plt.legend()

Results from ridge regression show that the tuning parameter alpha does not make a substantial difference when using the polynomial of best fit (n = 3). This is expected: ridge is most effective when there are many predictors and strong collinearity among them. Here, the highly collinear variables were removed during the EDA, leaving only 3 predictors for our modelling process. We therefore turn to neural networks to see whether they can improve on these results.
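The sketch below illustrates the claim that ridge helps most under strong collinearity. It fits ordinary least squares and ridge to synthetic data with two nearly identical predictors. It uses the closed-form solution in NumPy rather than scikit-learn's `Ridge`, with no intercept and no feature scaling. The data, `alpha=1.0`, and the helper `ridge_coef` are hypothetical and chosen only for illustration. They are not part of the original analysis.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic data (not the real-estate dataset): x2 is almost a copy of x1
n = 50
x1 = rng.normal(size=n)
x2 = x1 + rng.normal(scale=0.01, size=n)
X = np.column_stack([x1, x2])
y = 3.0 * x1 + rng.normal(scale=0.5, size=n)


def ridge_coef(X, y, alpha):
    """Closed-form ridge solution (X^T X + alpha * I)^-1 X^T y, without intercept."""
    p = X.shape[1]
    return np.linalg.solve(X.T @ X + alpha * np.eye(p), X.T @ y)


beta_ols = ridge_coef(X, y, 0.0)    # alpha = 0 reduces to ordinary least squares
beta_ridge = ridge_coef(X, y, 1.0)  # a small penalty stabilises the estimate

print("OLS:  ", beta_ols)    # typically large coefficients of opposite sign
print("Ridge:", beta_ridge)  # typically close to an even split of the true effect of 3
```

With near-duplicate columns, OLS can trade one coefficient off against the other almost for free, so noise drives them apart. The ridge penalty removes that freedom and shares the effect between the two columns.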

3.6 Neural networks

Neural networks are very effective at finding patterns in complex data such as images or sound. Rather than relying on hand-crafted features, they learn their own non-linear features in their hidden layers. The weights of those layers are fitted by gradient descent, using gradients computed through backpropagation. Whilst neural networks are known for working well on complex, homogeneous data (images, sound, video), we want to see whether they also work on a tabular task such as this one.
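The NumPy sketch below shows what learning non-linear features through backpropagation means in practice. It trains a one-hidden-layer ReLU network by full-batch gradient descent on a synthetic 1-D curve. The hidden ReLU units are the learned non-linear features. Backpropagation is the chain-rule computation of the gradients used to update them. The target function, layer width (16), learning rate, and step count are assumptions chosen for illustration. Keras computes the same kind of gradients automatically.

```python
import numpy as np

rng = np.random.default_rng(42)

# Toy 1-D regression target with a non-linear shape (synthetic, illustrative only)
X = rng.uniform(-2, 2, size=(200, 1))
y = np.sin(2 * X) + 0.1 * rng.normal(size=(200, 1))

# One hidden layer of 16 ReLU units; sizes and learning rate are assumptions
W1 = rng.normal(scale=0.5, size=(1, 16))
b1 = rng.normal(scale=0.5, size=(1, 16))
W2 = rng.normal(scale=0.5, size=(16, 1))
b2 = np.zeros((1, 1))
lr = 0.05


def forward(X):
    z1 = X @ W1 + b1
    h = np.maximum(z1, 0.0)  # ReLU activations: the learned non-linear features
    return z1, h, h @ W2 + b2


losses = []
for step in range(2000):
    z1, h, y_hat = forward(X)
    err = y_hat - y
    losses.append(float(np.mean(err ** 2)))  # MSE loss

    # Backpropagation: apply the chain rule from the loss back to each weight
    d_yhat = 2 * err / len(X)       # dL/dy_hat
    dW2 = h.T @ d_yhat
    db2 = d_yhat.sum(axis=0, keepdims=True)
    d_h = d_yhat @ W2.T
    d_z1 = d_h * (z1 > 0)           # derivative of ReLU
    dW1 = X.T @ d_z1
    db1 = d_z1.sum(axis=0, keepdims=True)

    # Plain gradient-descent update (in place on the global arrays)
    W1 -= lr * dW1
    b1 -= lr * db1
    W2 -= lr * dW2
    b2 -= lr * db2

print(f"MSE at start: {losses[0]:.3f}, after training: {losses[-1]:.3f}")
```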

import keras

class Mul(keras.layers.Layer):
    # Custom layer that multiplies its input by a fixed constant
    def __init__(self, val, **kwargs):
        super().__init__(**kwargs)  # required so Keras sets up the layer's internal state
        self.const = val

    def call(self, inputs):
        return inputs * self.const

We trial two model architectures: architecture one has two hidden layers of 10 and 5 nodes; architecture two has one hidden layer of 3 nodes. Each architecture was trained for 100 epochs using the Adam optimizer and a mean squared error loss function, although we continue to evaluate the models using MAE.
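To put the two architectures in perspective, the sketch below counts their trainable parameters. It assumes 3 input features (matching `input_dim=3`) and a single output unit, with every layer fully connected (Dense) and carrying a bias. The helper `dense_param_count` is hypothetical.

```python
def dense_param_count(layer_sizes):
    """Trainable parameters of a fully connected network.

    layer_sizes lists the width of every layer, input first and output last;
    each Dense layer has (inputs * units) weights plus `units` biases.
    """
    return sum(n_in * n_out + n_out
               for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:]))


arch_one = [3, 10, 5, 1]  # 3 inputs -> 10 -> 5 -> 1 output
arch_two = [3, 3, 1]      # 3 inputs -> 3 -> 1 output
print(dense_param_count(arch_one))  # 101
print(dense_param_count(arch_two))  # 16
```

For Dense-only models like these, Keras's `model.summary()` should report the same totals.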

# Architecture one: two hidden layers of 10 and 5 ReLU units
model = keras.models.Sequential()
model.add(keras.layers.Dense(10, input_dim=3, activation="relu"))
model.add(keras.layers.Dense(5, activation="relu"))
model.add(keras.layers.Dense(1, activation="relu"))

# Optional output rescaling (disabled): multiplying by the maximum expected
# price (78.3 in the line below) is only needed when the output activation
# bounds predictions to [0, 1], e.g. a sigmoid. The ReLU output used here is
# already unbounded above, so no rescaling is applied.
#model.add(keras.layers.Lambda(lambda x:x*78.3))

model.compile(loss="mse", optimizer="adam", metrics=["mae"])
history = model.fit(X_train, y_train, epochs=100, batch_size=1, validation_data=(X_test, y_test))

## Epoch 1/100


277/277 [==============================] - 1s 3ms/step - loss: 1623.0206 - mae: 37.9851 - val_loss: 1446.9401 - val_mae: 35.3607

## Epoch 2/100


277/277 [==============================] - 1s 3ms/step - loss: 1282.7953 - mae: 33.1719 - val_loss: 882.3262 - val_mae: 26.1436

## Epoch 3/100


277/277 [==============================] - 1s 3ms/step - loss: 521.2773 - mae: 19.5483 - val_loss: 233.1032 - val_mae: 10.8795

## Epoch 4/100


277/277 [==============================] - 1s 4ms/step - loss: 117.9464 - mae: 8.5848 - val_loss: 115.0206 - val_mae: 7.4276

## Epoch 5/100


277/277 [==============================] - 1s 4ms/step - loss: 71.0354 - mae: 6.2787 - val_loss: 100.2990 - val_mae: 6.7794

## Epoch 6/100


277/277 [==============================] - 1s 3ms/step - loss: 64.7187 - mae: 5.7063 - val_loss: 94.7780 - val_mae: 6.4805

## Epoch 7/100


277/277 [==============================] - 1s 3ms/step - loss: 63.3085 - mae: 5.5795 - val_loss: 93.6428 - val_mae: 6.4115

## Epoch 8/100


277/277 [==============================] - 1s 2ms/step - loss: 62.7366 - mae: 5.5280 - val_loss: 92.7976 - val_mae: 6.3701

## Epoch 9/100


277/277 [==============================] - 1s 2ms/step - loss: 62.1602 - mae: 5.5044 - val_loss: 91.7074 - val_mae: 6.3057

## Epoch 10/100


277/277 [==============================] - 1s 2ms/step - loss: 61.7240 - mae: 5.4706 - val_loss: 92.1252 - val_mae: 6.3121

## Epoch 11/100


277/277 [==============================] - 1s 2ms/step - loss: 61.3575 - mae: 5.3898 - val_loss: 90.7678 - val_mae: 6.1978

## Epoch 12/100


277/277 [==============================] - 1s 2ms/step - loss: 61.3264 - mae: 5.4117 - val_loss: 90.3098 - val_mae: 6.1619

## Epoch 13/100


277/277 [==============================] - 0s 2ms/step - loss: 60.9800 - mae: 5.4448 - val_loss: 90.8200 - val_mae: 6.1889

## Epoch 14/100


277/277 [==============================] - 1s 2ms/step - loss: 61.0885 - mae: 5.4398 - val_loss: 91.6540 - val_mae: 6.2739

## Epoch 15/100


277/277 [==============================] - 1s 2ms/step - loss: 60.8460 - mae: 5.3953 - val_loss: 90.1211 - val_mae: 6.1333

## Epoch 16/100


277/277 [==============================] - 1s 2ms/step - loss: 60.7129 - mae: 5.4128 - val_loss: 89.7553 - val_mae: 6.1455

## Epoch 17/100


277/277 [==============================] - 1s 2ms/step - loss: 60.1900 - mae: 5.3987 - val_loss: 91.2614 - val_mae: 6.2485

## Epoch 18/100


277/277 [==============================] - 1s 2ms/step - loss: 60.1262 - mae: 5.4036 - val_loss: 90.8319 - val_mae: 6.1611

## Epoch 19/100


277/277 [==============================] - 1s 2ms/step - loss: 59.8911 - mae: 5.3490 - val_loss: 91.0848 - val_mae: 6.1839

## Epoch 20/100


277/277 [==============================] - 1s 2ms/step - loss: 59.8212 - mae: 5.3750 - val_loss: 89.3640 - val_mae: 6.0898

## Epoch 21/100


277/277 [==============================] - 1s 2ms/step - loss: 59.7461 - mae: 5.3951 - val_loss: 89.5464 - val_mae: 6.0995

## Epoch 22/100


277/277 [==============================] - 1s 3ms/step - loss: 59.1370 - mae: 5.3011 - val_loss: 89.5793 - val_mae: 6.0811

## Epoch 23/100


277/277 [==============================] - 1s 2ms/step - loss: 58.9538 - mae: 5.3448 - val_loss: 91.0750 - val_mae: 6.1820

## Epoch 24/100


277/277 [==============================] - 1s 3ms/step - loss: 59.3487 - mae: 5.3564 - val_loss: 89.3995 - val_mae: 6.0709

## Epoch 25/100


277/277 [==============================] - 1s 2ms/step - loss: 59.4867 - mae: 5.3693 - val_loss: 89.1353 - val_mae: 6.0562

## Epoch 26/100


277/277 [==============================] - 1s 2ms/step - loss: 58.6831 - mae: 5.3213 - val_loss: 88.3136 - val_mae: 6.0345

## Epoch 27/100


277/277 [==============================] - 1s 2ms/step - loss: 58.5898 - mae: 5.3026 - val_loss: 90.5190 - val_mae: 6.1478

## Epoch 28/100


277/277 [==============================] - 1s 2ms/step - loss: 58.8507 - mae: 5.2839 - val_loss: 88.5909 - val_mae: 6.0390

## Epoch 29/100


277/277 [==============================] - 1s 2ms/step - loss: 58.2525 - mae: 5.2989 - val_loss: 89.6183 - val_mae: 6.1004

## Epoch 30/100


277/277 [==============================] - 1s 2ms/step - loss: 58.5629 - mae: 5.2766 - val_loss: 87.7345 - val_mae: 5.9872

## Epoch 31/100

277/277 [==============================] - 1s 2ms/step - loss: 58.4518 - mae: 5.3031 - val_loss: 88.0219 - val_mae: 5.9975

## Epoch 32/100

277/277 [==============================] - 1s 2ms/step - loss: 58.1015 - mae: 5.2719 - val_loss: 87.7934 - val_mae: 5.9897

## Epoch 33/100

277/277 [==============================] - 1s 2ms/step - loss: 58.4764 - mae: 5.2832 - val_loss: 88.1504 - val_mae: 6.0189

## Epoch 34/100

277/277 [==============================] - 1s 2ms/step - loss: 57.3072 - mae: 5.2990 - val_loss: 87.6568 - val_mae: 5.9852

## Epoch 35/100

277/277 [==============================] - 1s 2ms/step - loss: 57.7776 - mae: 5.1971 - val_loss: 88.8243 - val_mae: 6.0579

## Epoch 36/100

277/277 [==============================] - 1s 2ms/step - loss: 57.9905 - mae: 5.2495 - val_loss: 87.6656 - val_mae: 5.9798

## Epoch 37/100

277/277 [==============================] - 1s 2ms/step - loss: 57.8075 - mae: 5.2505 - val_loss: 87.8749 - val_mae: 5.9877

## Epoch 38/100

277/277 [==============================] - 1s 2ms/step - loss: 57.6881 - mae: 5.2946 - val_loss: 87.7492 - val_mae: 5.9794

## Epoch 39/100

277/277 [==============================] - 1s 2ms/step - loss: 57.5092 - mae: 5.2359 - val_loss: 88.6470 - val_mae: 6.0484

## Epoch 40/100

277/277 [==============================] - 1s 2ms/step - loss: 57.4515 - mae: 5.2653 - val_loss: 88.2045 - val_mae: 6.0193

## Epoch 41/100

277/277 [==============================] - 1s 2ms/step - loss: 57.4513 - mae: 5.2501 - val_loss: 87.0614 - val_mae: 5.9509

## Epoch 42/100
